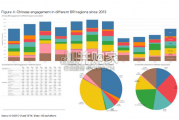

47% of these implementations are based on PyTorch, vs. 18% for TensorFlow. PyTorch offers greater flexibility and a dynamic computational graph, which makes experimentation easier. JAX, a Google framework, is more math-friendly and is favored for work outside of convolutional models and transformers. Empirical scaling laws for neural language models show smooth power-law relationships: as model performance improves, model size and the amount of computation have to grow more rapidly.
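
To illustrate what a dynamic (define-by-run) computational graph means, here is a minimal pure-Python sketch. It is a hypothetical toy, not PyTorch's API: the class `Value` and the function `forward` are illustrative names. The graph is recorded while the operations execute, so ordinary Python control flow (the loop and the `if` inside `forward`) can change the graph's shape from one call to the next.

```python
# Minimal define-by-run reverse-mode autodiff sketch (hypothetical toy code,
# not PyTorch's API). Each operation records its inputs as it runs.


class Value:
    """A scalar node; the graph is built as operations actually execute."""

    def __init__(self, data, parents=()):
        self.data = float(data)
        self.grad = 0.0
        self._parents = parents      # nodes this value was computed from
        self._backward_fn = None     # pushes self.grad back to the parents

    def __add__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data + other.data, (self, other))

        def backward_fn():
            # d(a + b)/da = 1 and d(a + b)/db = 1
            self.grad += out.grad
            other.grad += out.grad

        out._backward_fn = backward_fn
        return out

    def __mul__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data * other.data, (self, other))

        def backward_fn():
            # d(a * b)/da = b and d(a * b)/db = a
            self.grad += other.data * out.grad
            other.grad += self.data * out.grad

        out._backward_fn = backward_fn
        return out

    def backward(self):
        # Topologically sort the graph recorded during the forward pass,
        # then apply the chain rule from the output back to the inputs.
        order, seen = [], set()

        def visit(node):
            if node not in seen:
                seen.add(node)
                for parent in node._parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0
        for node in reversed(order):
            if node._backward_fn is not None:
                node._backward_fn()


def forward(x, n_steps):
    # Plain Python control flow decides the graph's shape at run time:
    # different n_steps (or different data) produce a different graph.
    y = x
    for _ in range(n_steps):
        y = y * x if y.data < 100 else y + x
    return y


if __name__ == "__main__":
    x = Value(3.0)
    y = forward(x, n_steps=2)  # builds y = x * x * x
    y.backward()
    print(y.data, x.grad)      # prints 27.0 27.0
```

In a static-graph design the loop and branch would have to be expressed as special graph operators up front; here they are just Python, which is the flexibility the paragraph refers to.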

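The power-law claim can be made concrete with a small worked sketch. Assume a single-variable law of the form L(N) = (N_c / N)^α, where L is test loss, N is parameter count, and N_c and α are fitted constants; the constant values below are hypothetical placeholders, not figures from this report. Inverting it gives N = N_c · L^(-1/α). Because α is much smaller than 1, a given relative reduction in loss requires a far larger relative increase in N.

```python
# Worked sketch of a single-variable power law L(N) = (N_c / N) ** alpha.
# N_C and ALPHA are hypothetical placeholder constants for illustration only.

N_C = 1e13     # hypothetical fitted constant (same units as parameter count)
ALPHA = 0.076  # hypothetical exponent; any 0 < alpha < 1 shows the same effect


def loss_from_params(n_params: float, n_c: float = N_C, alpha: float = ALPHA) -> float:
    """Loss predicted by the power law for a model with n_params parameters."""
    return (n_c / n_params) ** alpha


def params_for_loss(target_loss: float, n_c: float = N_C, alpha: float = ALPHA) -> float:
    """Invert the power law: N = N_c * L ** (-1 / alpha)."""
    return n_c * target_loss ** (-1.0 / alpha)


def growth_needed(loss_ratio: float, alpha: float = ALPHA) -> float:
    """Factor by which N must grow to divide the loss by loss_ratio.

    From L1 / L2 = (N2 / N1) ** alpha it follows that N2 / N1 = (L1 / L2) ** (1 / alpha).
    """
    return loss_ratio ** (1.0 / alpha)


if __name__ == "__main__":
    for ratio in (1.05, 1.10, 1.25):
        print(f"loss divided by {ratio:.2f} -> parameters multiplied by {growth_needed(ratio):.1f}")
```

The same algebra applies to compute if the law is written in terms of C instead of N; only the fitted constants change.
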
PolyAI, a London-based conversational AI company, open-sourced its ConveRT model (a pre-trained contextual re-ranker based on transformers). The model outperforms Google's BERT in conversational applications, especially in low-data regimes, suggesting that BERT is far from a silver bullet for all NLP tasks.

GPT-3, T5, and BART are driving a drastic improvement in the performance of transformer models on text-to-text tasks such as translation, summarization, text generation, and text-to-code generation.
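
The contextual re-ranking described for ConveRT can be pictured as a scoring-and-sorting step. The sketch below shows only that step, under stated assumptions: the context and the candidate responses have already been encoded into vectors by some pre-trained model (the vectors here are made-up placeholders), and relevance is measured by cosine similarity. It does not reproduce ConveRT's architecture or API; `cosine` and `rerank` are hypothetical helper names.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either has zero length)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def rerank(context_vec: Sequence[float],
           candidates: Dict[str, Sequence[float]]) -> List[Tuple[str, float]]:
    """Return (response, score) pairs sorted from best to worst match."""
    scored = [(text, cosine(context_vec, vec)) for text, vec in candidates.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    # Hypothetical 3-d vectors standing in for a pre-trained encoder's outputs.
    context = [0.9, 0.1, 0.0]  # e.g. "Can I book a table for tonight?"
    candidates = {
        "Sure, what time would you like?": [0.8, 0.2, 0.1],
        "It will be sunny tomorrow.": [0.0, 0.1, 0.9],
        "Our menu changes weekly.": [0.4, 0.5, 0.3],
    }
    for response, score in rerank(context, candidates):
        print(f"{score:.3f}  {response}")
```

Keeping the encoder separate from the scoring step means candidate responses can be encoded once and reused across many contexts; only the cheap similarity computation runs per query.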

标签: 英文报告下载

相关文章

China’s financing and investment spread across 61 BRI countries in 2023 (up...

2024-02-27 31 英文报告下载

Though the risk of AI leading to catastrophe or human extinction had...

2024-02-26 52 英文报告下载

Focusing on the prospects for 2024, global growth is likely to come i...

2024-02-21 96 英文报告下载

Economic activity declined slightly on average, employment was roughly flat...

2024-02-07 67 英文报告下载

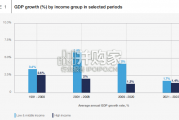

Economic growth can be defned as an increase in the quantity or quali...

2024-02-06 82 英文报告下载

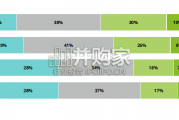

In this initial quarterly survey, 41% of leaders reported their organizatio...

2024-02-05 66 英文报告下载

最新留言